From personal experience to an AAC product

Click2Speak was founded in 2016 with the vision of revolutionizing the way individuals with severe physical impairments communicate. Focused on empowering people through a new on-screen keyboard, Click2Speak works in close collaboration with end users and professionals to offer a cutting-edge augmentative and alternative communication (AAC) tool. It all started in 2009, when Gal Sont (1976-2016), the driving force behind this inspiring project, was diagnosed with ALS (amyotrophic lateral sclerosis, also known as Lou Gehrig’s disease).

Gal told his story in a short video that he prepared for an AAC workshop in 2016, and in an interview with BBC Click.

More from Gal: “…My ALS started in my right arm and hand. ALS is a disease of the nerve cells in the brain and spinal cord that control voluntary muscle movement. My arm, hand and fingers gradually weakened, and as I am right-handed, in order to operate a regular PC mouse, I had to use an armrest mouse pad, practically using my shoulder for control. For mouse clicking, I used my leg, pressing a button on the floor. As my disease progressed, controlling the mouse became very difficult and I began using alternatives such as a head mouse and eye gaze cameras…

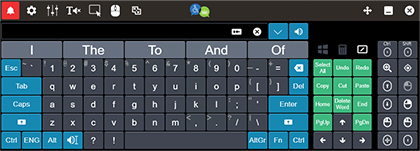

After being diagnosed with the disease, I contacted other individuals who suffer from ALS at different stages, and began to learn about the different challenges that I would face as my disease progressed. I also learned about the tech solutions they used to cope with these challenges. The most basic challenge was typing, which is done using a virtual on-screen keyboard, a common solution shared not only by individuals affected by ALS but also by people with a variety of conditions such as brain trauma, MS and spinal cord injuries. The fully featured advanced on-screen keyboards proved relatively expensive, so I decided to develop the ultimate on-screen keyboard on my own.

It quickly became apparent that using our keyboard enabled me to work faster and more accurately. Friends who used other solutions prior to ours were delighted with the results, albeit a small sample size. This started a new journey that introduced me to SwiftKey’s revolutionary technologies and how we could customize them to our specific needs. I reached a first version of our keyboard and distributed it to friends who also suffer from ALS. They gave us invaluable feedback throughout the development process, and they all raved about its time-saving capabilities and accuracy and how it makes their lives a little easier.

At this point I had my good friend Dan join me in this endeavor as I needed help with detail design, quality assurance, market research, and many other tasks. We formed ‘Click2Speak’, and we plan to make the world a better place! For real!”

Dan:

“…Gal and I have been best friends for almost 20 years. I have followed his amazing career as a senior developer and CTO. Gal was a true friend, and one of the brightest minds that I have ever met. A fearless and seasoned entrepreneur. Of course, I was devastated to hear about Gal’s illness. But Gal’s spirit during the tough struggle with ALS has never been broken. When Gal approached me with the idea of developing a superior on-screen keyboard for the benefit of people with special needs – I was extremely intrigued. I knew that I would love to join Gal in this journey to improve the way people around the world communicate. I also knew that nothing would stop Gal from reaching our goals, not even something ‘minor’ like a progressing ALS condition. I knew that Gal would move forward using his eyes, feet, whatever… when Gal had passion for something – nothing stopped him.

Unfortunately, in May 2016 Gal passed away after a brave struggle with the disease. May he rest in peace. We are here to continue his inspiring legacy…”

Combined with any high-performing hardware such as a head or foot mouse, or even a standard PC mouse, our on-screen keyboard software can help users overcome some of the communication difficulties caused by conditions like stroke, ALS, cerebral palsy, or spinal cord injury, and make their PC input faster, more accurate, and more fun.

In early 2016 we started collaborating with Google.org, which decided to support our initiative with a generous grant. We have also been collaborating with Ezer Mizion, Israel’s largest medical nonprofit organization.